Online Course

NRSG 790 - Methods for Research and Evidence-Based Practice

Module 2: Research Overview and Design

Reliability and Validity

In order for assessments to be sound, they must be free of bias and distortion. Reliability and validity are two concepts that are important for defining and measuring bias and distortion.

Reliability refers to the extent to which assessments are consistent. Another way to think of reliability is to imagine a kitchen scale. If you weigh five pounds of potatoes in the morning, and the scale is reliable, the same scale should register five pounds for the potatoes an hour later. Likewise, instruments such as classroom tests and national standardized exams should be reliable – it should not make any difference whether a student takes the assessment in the morning or afternoon; one day or the next.

Validity refers to the accuracy of an assessment -- whether or not it measures what it is supposed to measure. Even if a test is reliable, it may not provide a valid measure. Let’s imagine a bathroom scale that consistently tells you that you weigh 130 pounds. The reliability (consistency) of this scale is very good, but it is not accurate (valid) because you actually weigh 145 pounds. Also, if a test is valid, it is almost always reliable.

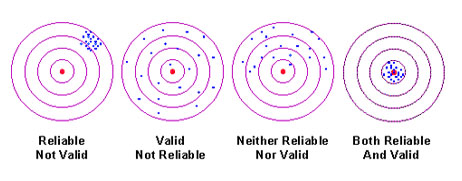

The above situation shows four possible outcomes:

- In the first one, you are hitting the target consistently, but you are missing the center. You are consistently measuring the wrong value – reliable but not valid

- In the second you are spreading you hits across the target randomly and getting some right for the group in the center but not all – valid but not reliable

- In the third you are you are spreading your hits in a wide area but missing the center – neither reliable or valid

- Last, you consistently hit the center – reliable and valid

Factors Affecting Reliability & Validity

- Manner in which the measure is scored

- Example: Having an essay test in English scored by a non English speaking person rather than an English speaking scorer.

- Characteristics of the measure itself

- Example: Measuring blood pressure by asking the subject whether or not they think their blood pressure is normal rather than using a recently calibrated blood pressure cuff.

- Physical and/or emotional state of the individual at the time of measurement

- Example: Asking a mother who is late picking up her toddler from nursery school to complete a 10 page satisfaction questionnaire before leaving the exam room as compared to a mother who has no pressing need to leave in a hurry.

- Properties of the situation in which the measure is administered

- Example: Interviewing a subject while standing in the check out line in a grocery store as compared to interviewing a subject in a private room with comfortable seating and a quiet atmosphere.

- If the attribute being measured does not change , a reliable tool or method should produce stable observations and scores

- Example: Responses from an environmental survey mailed to residents living in Baltimore City that is forwarded to a respondent who has relocated to a rural area of a different state as compared to respondents who are still living in Baltimore City.

- Since one can never directly compare an observed score with a true score, the true score is estimated by obtaining and comparing multiple observations about the attribute of interest

- Example: If the interest is in the activities of daily living skills of a geriatric patient living in an assisted living facility observing the patient over time performing a variety of skills as compared to observing the patient on only one occasion.

Reliability and validity of a tool or method should be assessed each time it is used to ascertain that it is performing as planned.

Estimating Reliability And Validity

| Type | Procedure | Reliability Index | Appropriate to Use |

|---|---|---|---|

| Test Retest/Repeated Measures:Consistency of performance a measure elicits from one group of subjects on two separate test occasions | Test administered under standardized conditions to a single group of subjects representative of the group for which the measure was designed. Two weeks later, same test given under same conditions to same group of subjects. Correlation coefficient (rxy) determined | Coefficient of stability, reflects extent to which the measure rank orders subjects the same on two separate occasions. Closer coefficient (rxy ) is to 1.00 the more stable the measure | For tools that measure characteristics relatively stable over time & for clinical tools that produce only 1 score that can be given 2 or more times. |

| Parallel Form:Consistency of performance alternate forms of a test elicit from one group of subjects on one testing occasion. | Two tests are parallel if: 1) constructed using same objectives and procedures; 2) have approximately = means;3) equal correlation with a third variable; 4) have equal means and standard deviations. Two forms given to one group on same occasion or on two separate occasions. Correlation between 2 sets of scores determined using rxy | Values above 0.80 provide evidence two forms can be used interchangeably. If both forms given same time rxy reflects form equivalence; if given 2 times reflects stability as well. | Whenever 2 or more tools are available, is the preferred method. |

| Internal Consistency: Consistency of performance of a group of individuals across the items on a single test. | Test administered under standardized conditions to representative group on one occasion. Alpha coefficient reflects extent to which performance on any one item is a good indicator of performance on any other item. | Alpha coefficient is preferred because it provides a single value for any given data set; is equal in value to the mean of the distribution of all possible split –half coefficients for data set. | For interviews, multi-item scales. |

| Interrater: consistency of performance among raters or judges (or degree of agreement between them) in assigning scores to the objects, responses, or observational data being judged. | Two or more competent raters score responses to a set of subjective items . When two raters employed, rxy is used to determine the degree of agreement between them. If more than two raters alpha may be used. Kappa coefficient used when aim is to compare ratings of two judges classifying patients into diagnostic categories. | A coefficient =0 indicates complete lack of agreement; coefficient = 1.00 indicates complete agreement. Agreement means the relative ordering of scores assigned by raters. | Raters often trained to a high degree of agreement in scoring prior to data collection. Interrater reliability is especially useful with behavioral observations &/or proxy judgments of a patients state. |

| Intrarater: Consistency with which one rater assigns scores to a single set of test item responses on two occasions. | One rater assigns scores to a subjective measure using a fixed scale and without recording answers on the scoring sheet. About 2 weeks later, responses are shuffled and rescored using the same procedure as on occasion 1. Agreement between the two scorings is assessed using rxy. | Zero value for rxy is interpreted as inconsistency; a value of 1.00 as complete consistency. | In determining extent to which an individual applies the same criteria to rate responses on different occasions especially with subjective measures; enables one to determine the degree to which ratings are influenced by temporal factors. |

Adapted from Waltz, C F, Strickland, OL, Lenz, ER. (2005) Measurement in nursing and health research (3rd ed) 138-145. New York: Springer Publishing

Estimating Validity

| Type | Procedure | Appropriate to Use |

|---|---|---|

| Content: Determine how subject performs at present in a domain of situations the tool intends to represent | Review of objectives and items on tool by panel of experts and content validity index (CVI) or percent agreement determined. | Where performance on a small set of items in one tool serves as lone indicator of how well content domain is represented |

| Construct: Infer degree to which subject possesses some hypothetical trait or quality presumed to be reflected in performance on tool. | Comparison of subject scores on tool with scores of subjects known to have high and low amounts of attribute using D index, factor analysis, MTMM | In cases where it is believed that individual differences in performance on the tool reflect differences in the trait about which inference is being made |

| Criterion-related: Forecast subject’s future or present standing on variable of particular significance that is different from the tool | Correlation of scores on the tool with present or future scores on a second measure. ROC curves to examine predictive value of diagnostic test. | For tool used to predict present or future performance. |

Adapted from Waltz,CF, Strickland, OF,& Lenz, ER.(2005) Measurement in nursing and health research (3rd ed) 138-145. New York: Springer Publishing

Try This

Reflect on the following scenarios.

- You are asked about the reliability coefficient on a recent standardized test. The coefficient was reported as .89. How would you explain that .89 is an acceptable coefficient?

- You are looking for an assessment instrument to measure reading ability. They have narrowed the selection to two possibilities -- Test A provides data indicating that it has high validity, but there is no information about its reliability. Test B provides data indicating that it has high reliability, but there is no information about its validity. Which test would you recommend? Why?

This website is maintained by the University of Maryland School of Nursing (UMSON) Office of Learning Technologies. The UMSON logo and all other contents of this website are the sole property of UMSON and may not be used for any purpose without prior written consent. Links to other websites do not constitute or imply an endorsement of those sites, their content, or their products and services. Please send comments, corrections, and link improvements to nrsonline@umaryland.edu.